Provably Robust Conformal Prediction

with Improved Efficiency

Abstract

Conformal prediction is a powerful tool to generate uncertainty sets with guaranteed coverage using any predictive model, under the assumption that the training and test data are i.i.d.. Recently, it has been shown that adversarial examples are able to manipulate conformal methods to construct prediction sets with invalid coverage rates, as the i.i.d. assumption is violated.

To address this issue, a recent work, Randomized Smoothed Conformal Prediction (RSCP), was first proposed to certify the robustness of conformal prediction methods to adversarial noise. However, RSCP has two major limitations: (i) its robustness guarantee is flawed when used in practice and (ii) it tends to produce large uncertainty sets.

To address these limitations, we first propose a novel framework called RSCP+ to provide provable robustness guarantee in evaluation, which fixes the issues in the original RSCP method. Next, we propose two novel methods, Post-Training Transformation (PTT) and Robust Conformal Training (RCT), to effectively reduce prediction set size with little computation overhead. Experimental results in CIFAR10, CIFAR100, and ImageNet suggest the baseline method only yields trivial predictions including full label set, while our methods could boost the efficiency by up to 4.36×, 5.46×, and 16.9× respectively and provide practical robustness guarantee.

Overview of our paper.

What is Conformal Prediction (CP)

An example of conformal prediction from [1]

Conformal prediction has been a powerful tool to quantify prediction uncertainties of modern machine learning models. For classification tasks, given a test input \(x_{n+1}\), it could generate a prediction set \(C(x_{n+1})\) with coverage guarantee: $$ \mathbb P [y_{n+1} \in C(x_{n+1})] \geq 1-\alpha. $$

In conformal prediction, usually a (non)-conformity score function \(S(x, y)\) is defined, which measures how label \(y\) conforms to input \(x\). The prediction set is constructed as: $$ C(x) = \{y \mid S(x, y) \leq \tau \}, $$ where \(\tau\) is a threshold computed on a seperate calibration set, according to the target coverage level \(1-\alpha\).

Adversarial robustness of conformal prediction

Under adversarial attack, the coverage guarantee no longer hold. To rebuild the coverage guarantee, RSCP[2] is proposed to provide adversarially robust conformal prediction. RSCP proposed to apply conformal prediction on (non)-conformity scores: $$ \tilde S(x, y) = \Phi^{-1}[S_{\text{RS}}(x, y)] = \Phi^{-1}[\mathbb E_{\delta \sim \mathcal N(0, \sigma^2I)}S(x + \delta, y)], $$ where \(\Phi\) denotes Gaussian cdf. The randomized smoothed score \(\tilde S(x, y)\) is Lipschitz continuous with constant \(1/\sigma\), hence the score of adversarially perturbed example could be bound: $$ \tilde S(\tilde x, y) \leq \tilde S(x, y) + \frac{\epsilon}{\sigma}, $$ where \(\tilde x\) is the perturbed example that \(\|\tilde x - x\|_2 \leq \epsilon\).

However, RSCP still faces two challenges:

Challenge 1: The robustness guarantee of RSCP is flawed. RSCP

introduces randomized smoothing to provide robustness guarantee. Unfortunately, the derived

guarantee is invalid when Monte Carlo sampling is used for randomized smoothing, which is how

randomized smoothing is implemented in practice [3]. Therefore, their robustness

certification is invalid, despite empirically working well.

Challenge 2: RSCP has low efficiency. The average

size of prediction sets of RSCP is much larger than the vanilla conformal prediction.

Address challenge 1: RSCP+ framework

To address the technique flaw in RSCP, we proposed RSCP+, a novel framework to provide guaranteed robustness for conformal prediction. The key idea is utilizing the Monte-Carlo estimator directly as the conformity score in conformal prediction, and bound the score of perturbed examples. To be more specific, the bound contains three parts:

- Bound between \(S_{\text{RS}}\) of perturbed input and original input. This bound is guaranteed by randomized smoothing.

- Error from Monte Carlo approximation of perturbed input score, bounded by Hoeffding's inequality.

- Error from Monte Carlo approximation of clean input score, bounded by Hoeffding's inequality.

With this bound, we could provide guaranteed robustness with our RSCP+.

RSCP+ algorithm

Input: Input image \(x_{n+1}\), calibration set \(D_{cal}\), target coverage level \(1 - \alpha, \epsilon, \sigma, \beta\).

Output: Guaranteed robust prediction set \(C_\epsilon^+\).

- Calculate calibration scores with randomized smoothing \(\{\hat{S}_{\text{RS}}(x, y)\}_{(x, y) \in D_{\text{cal}}}\).\(\hat{S}_{\text{RS}}(x, y) =\frac{1}{N_{\text{MC}}} \sum_{i=1}^{N_{\text{MC}}} S(x + \delta_i, y), \delta_i \sim \mathcal{N}(0, \sigma^2 I_p)\) is the Monte Carlo approximation of \(S_{\text{RS}}\).

- Calculate threshold \(\tau_{\text{MC}}\) as \(\frac{1 - \alpha - 2\beta}{1 + 1 / |D_{\text{cal}}|}\) empirical quantile of calibration scores.

- Calculate test conformity score for each class with randomized smoothing \({\hat{S}_{\text{RS}}(\tilde{x}_{n+1}, k)}_{k=1}^K\).

- Calculate prediction set \(C_\epsilon^+\) with Eq. (17) in the paper.

Address challenge 2: Post-Training Transformation (PTT) and Robust Conformal Training (RCT)

To improve the efficiency of RSCP, we proposed two methods, Post-Training Transformation (PTT) and Robust Conformal Training (RCT).

Method 1: Post-Training Transformation (PTT)

PTT consists of two transformations on the base conformity score: (1) ranking transformation and (2) sigmoid transformation.

Ranking transformation. The ranking transformation utilizes a hold out set:

$$

\mathcal{Q}_{\text{rank}}(s) = \frac{r\left[s; \{S(x, y)\}_{(x, y) \in D_{holdout}}\right]}{|D_{\text{holdout}}|}.

$$

Sigmoid transformation. In this step, a sigmoid function \(\phi\) is applied on \(S\): $$ \mathcal{Q}_{\text{sig}}(s) = \phi\left[(s - b) / T\right], $$ where \(b, T\) are hyper-parameters controlling this transformation.

Method 2: Robust Conformal Training (RCT)

Besides PTT which is a post-training method, we propose a novel training pipeline called Robust Conformal Training (RCT). The key idea is to simulate the conformal prediction procedure during trainig. The training pipeline is shown in the figure below. The training objective is defined as: $$ L(x, y_{\text{gt}}) = L_{\text{class}}(c(x, y; \tau^{\text{soft}}), y_{\text{gt}}) + \lambda L_{\text{size}}(c(x, y; \tau^{\text{soft}})), $$ where the classification loss $$ L_{\text{class}}(c(x, y; \tau^{\text{soft}}), y_{\text{gt}}) = 1 - c(x, y_{\text{gt}}; \tau^{\text{soft}}), $$ and the size loss $$ L_{\text{size}}(c(x, y; \tau^{\text{soft}})) = \max(0, \sum_{y=1}^K c(x, y;\tau^{\text{soft}}) - \kappa). $$

Training pipeline of RCT.

Results

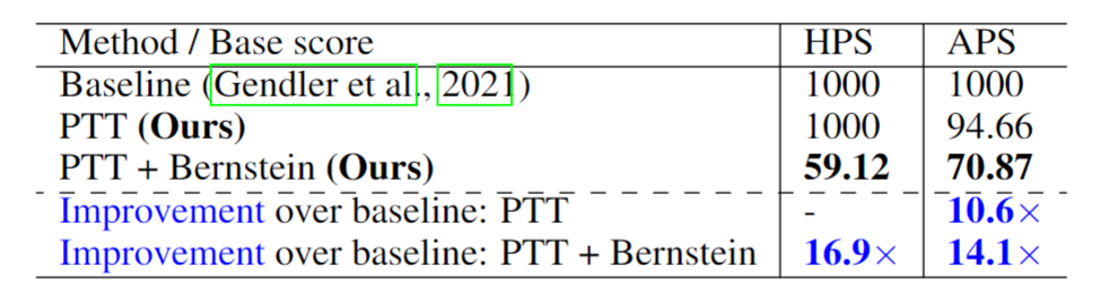

The tables below show the average size of prediction sets over three vision datasets. The baseline method gives trivial prediction sets (predict full label set) when trying to provide guaranteed robustness. With our methods, we provide the first meaningful, provably robust conformal prediction, with boosted efficiency by up to 4.36×, 5.46×, and 16.9× on CIFAR10, CIFAR100 and ImageNet, respectively.

Average size of prediction sets on CIFAR10 & CIFAR100

Average size of prediction sets on ImageNet

Related works

[1] Angelopoulos, Anastasios Nikolas, Stephen Bates, Michael Jordan, and Jitendra Malik.

"Uncertainty Sets for Image Classifiers using Conformal Prediction."

In International Conference on Learning Representations. 2020.

[2] Gendler, Asaf, Tsui-Wei Weng, Luca Daniel, and Yaniv Romano.

"Adversarially robust conformal prediction."

In International Conference on Learning Representations. 2021.

[3] Cohen, Jeremy, Elan Rosenfeld, and Zico Kolter.

"Certified adversarial robustness via randomized smoothing."

In international conference on machine learning, pp. 1310-1320. PMLR, 2019.

Cite this work

G. Yan, Y. Romano and T.-W. Weng, Provably Robust Conformal Prediction with Improved Efficiency , ICLR 2024.

@inproceedings{

yan2024provably,

title={Provably Robust Conformal Prediction with Improved Efficiency},

author={Ge Yan and Yaniv Romano and Tsui-Wei Weng},

booktitle={The Twelfth International Conference on Learning Representations},

year={2024},

url={https://openreview.net/forum?id=BWAhEjXjeG}

}

This webpage template was recycled from here.